Ant Group is using Chinese-made semiconductors to train artificial intelligence models, reducing its reliance on restricted US technology, according to people with knowledge of the situation.

The Alibaba-affiliated company has used chips from domestic suppliers, including ones tied to Alibaba and Huawei Technologies, to train large language models with the Mixture of Experts (MoE) method. Sources said the results were comparable to those achieved with Nvidia H800 chips. Ant still uses Nvidia chips for some AI development, but according to sources the firm is shifting toward alternatives from AMD and Chinese manufacturers for its newer models.

Ant Group’s MoE Breakthrough: Lowering AI Training Costs Amid Global Hardware Challenges

Ant's adoption of the Mixture of Experts method signals its growing role in the AI race between Chinese and international tech firms, as companies look for cost-effective ways to train models. Chinese firms are testing homegrown hardware to work around export restrictions on high-end chips such as Nvidia's H800, a GPU that Chinese organizations still seek to acquire.

In a published research paper, Ant says its models outperformed Meta's in some tests. Bloomberg News, which first reported the story, has not independently verified the company's results. If the reported results are accurate, Ant's work marks progress in Chinese efforts to cut the cost of running AI applications and to reduce dependence on foreign hardware.

MoE models split work into smaller pieces that are handled by separate components, and the approach has become a prominent topic among AI researchers. Google and the startup DeepSeek both use the technique. An MoE model works like a team of specialists, with each member handling a specific part of a task, which makes producing a model more efficient. Ant has not commented on its hardware sources.
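The "team of specialists" idea can be sketched in a few lines of Python. This is a toy illustration of top-k expert routing in general, not Ant's implementation: the expert functions, gate weights, and the names `make_expert` and `moe_forward` are hypothetical, chosen only to show how a gate selects a few experts per input and blends their outputs.

```python
import math
import random


def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical expert: a toy function that scales its input."""
    return lambda x: [scale * v for v in x]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input x to the top_k experts and blend their outputs."""
    # One gate score per expert: dot product of the input and that expert's gate row.
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    probs = softmax(scores)
    # Only the top_k highest-scoring experts run; the rest are skipped entirely.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(x)
    for i in chosen:
        weight = probs[i] / norm  # renormalise over the chosen experts
        expert_out = experts[i](x)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, chosen


random.seed(0)
experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[random.uniform(-1, 1) for _ in range(3)] for _ in experts]
output, chosen = moe_forward([0.5, -1.0, 2.0], gate_weights, experts, top_k=2)
print("experts used:", chosen)
print("blended output:", output)
```

Because only two of the four experts run for each input, the compute per input is a fraction of what a model of the same total size would need if every component ran every time. That is the efficiency argument behind the approach.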

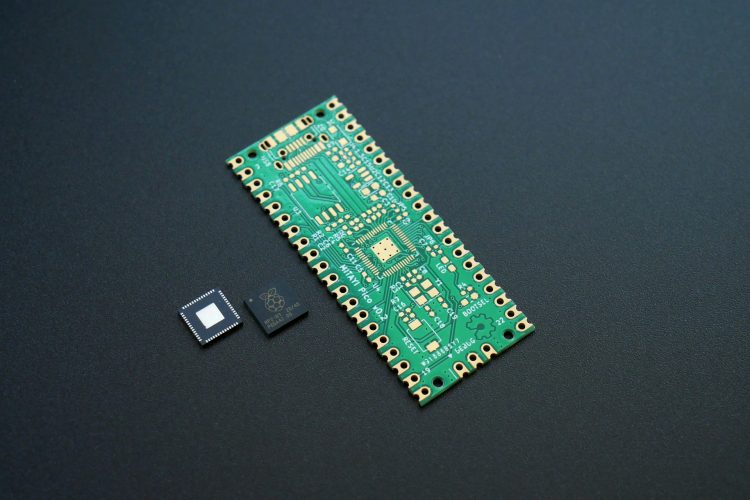

Training MoE models typically requires high-performance GPUs, which are too expensive for smaller companies to acquire or use. Ant's research aims to lower that cost barrier. The paper states the goal at the end of its title: scaling models "without premium GPUs." [our quotation marks]

Ant's direction, and its use of MoE to reduce training costs, contrasts with Nvidia's approach. CEO Jensen Huang has said that demand for computing power will keep growing even with the arrival of more efficient models such as DeepSeek's R1. He believes companies will buy more powerful chips to drive revenue growth rather than cut costs with cheaper alternatives. Nvidia continues to build GPUs with more cores, more transistors, and more memory.

According to the Ant Group paper, training on one trillion tokens with conventional high-performance hardware costs about 6.35 million yuan (roughly $880,000). The company cut that to about 5.1 million yuan by using less expensive chips.
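The size of the saving follows from the two yuan figures above, as the short calculation below works through. The dollar value for the lower figure is not in the report; it is an assumption derived from the exchange rate implied by the article's own 6.35 million yuan to $880,000 conversion.

```python
# Figures reported in the article for training on one trillion tokens.
BASELINE_YUAN = 6_350_000   # conventional high-performance hardware
OPTIMIZED_YUAN = 5_100_000  # less expensive chips
BASELINE_USD = 880_000      # article's dollar equivalent of the baseline


def training_cost_summary(baseline_yuan, optimized_yuan, baseline_usd):
    """Compute the saving and an approximate dollar value for the lower cost."""
    saving_yuan = baseline_yuan - optimized_yuan
    saving_pct = 100 * saving_yuan / baseline_yuan
    # Assumption: reuse the exchange rate implied by the article's own conversion.
    implied_rate = baseline_yuan / baseline_usd
    optimized_usd = optimized_yuan / implied_rate
    return {
        "saving_yuan": saving_yuan,
        "saving_pct": saving_pct,
        "implied_rate": implied_rate,
        "optimized_usd": optimized_usd,
    }


summary = training_cost_summary(BASELINE_YUAN, OPTIMIZED_YUAN, BASELINE_USD)
print(f"Saving: {summary['saving_yuan']:,} yuan ({summary['saving_pct']:.1f}%)")
print(f"Implied rate: {summary['implied_rate']:.2f} yuan per dollar")
print(f"Lower cost in dollars (approx.): {summary['optimized_usd']:,.0f}")
```

On these numbers the reduction is 1.25 million yuan, or just under 20 percent of the baseline cost.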

Ant said it plans to apply its Ling-Plus and Ling-Lite models to industrial AI use cases in healthcare and finance. As part of its healthcare push, Ant acquired the Chinese online medical platform Haodf.com in the first half of the year. It also operates a range of AI services, including the virtual assistant Zhixiaobao and the financial advisory platform Maxiaocai.

Significant AI progress comes down to finding the weak point of a master-level kung fu fighter, notes Robin Yu of Shengshang Tech, a Beijing-based AI firm.

Ant has released its models as open source. Ling-Lite has 16.8 billion parameters, while Ling-Plus has 290 billion. For comparison, closed-source GPT-4.5 is estimated to have about 1.8 trillion parameters, according to MIT Technology Review.

Ant's paper notes that training models remains difficult. Changes to hardware or model structure during training often led to unstable performance, including spikes in error rates.